
Softmax Classifier

Use a Softmax classifier to solve multi-class classification problems.

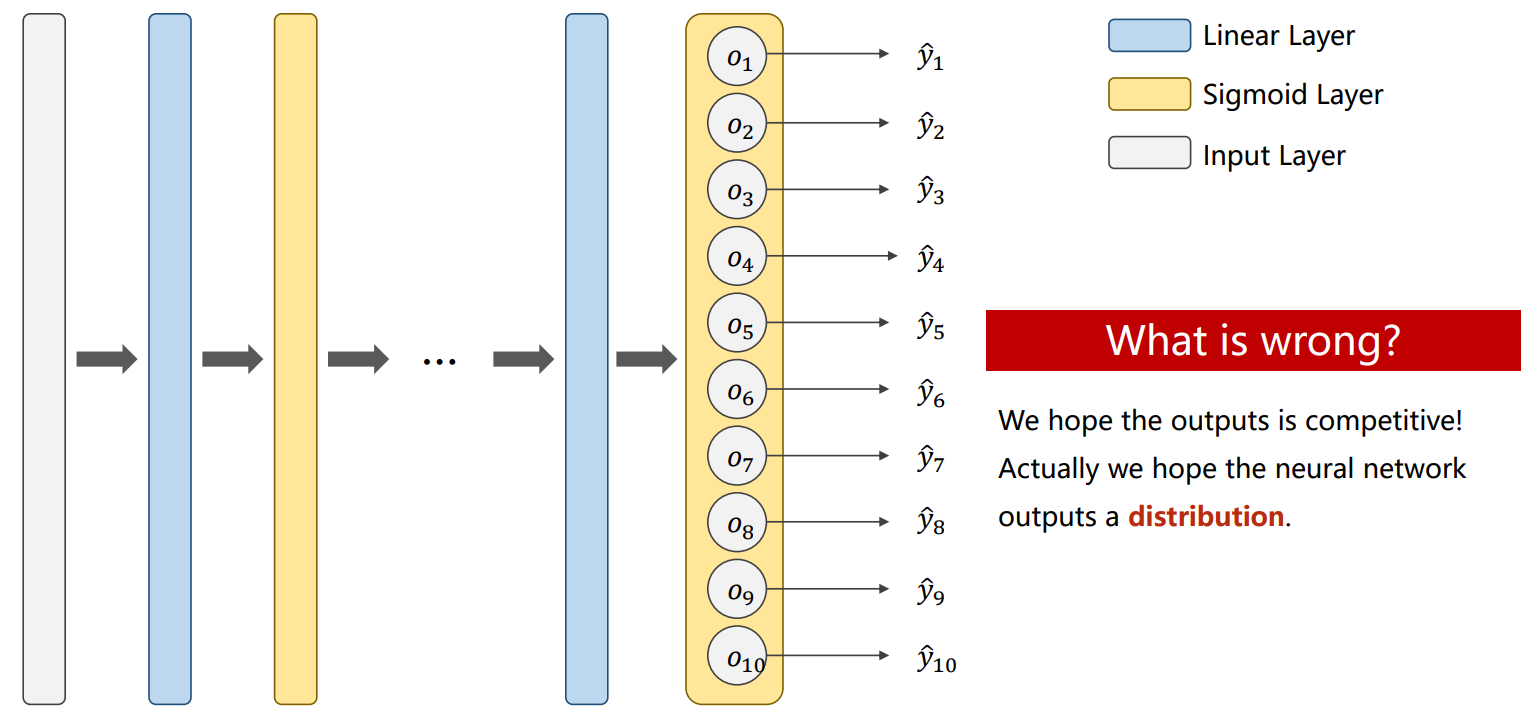

Design 10 Outputs using Sigmoid?

Increase the number of outputs to 10, one per digit, so that the network gives the probability that the input is each digit.

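With this design, however, each digit's probability is computed as an independent binary classification ("is this digit" vs. "is not this digit"), whereas in reality the classes constrain one another.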

The output of a multi-class problem should be a distribution: every class probability is greater than 0, and the probabilities sum to 1.
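The following NumPy sketch makes the difference concrete. Ten independent sigmoid outputs (one per digit) each fall in (0, 1), but nothing forces them to sum to 1. A Softmax over the same scores does produce a valid distribution. The score vector `scores` is made-up example data:

```python
import numpy as np

def sigmoid(z):
    # Element-wise logistic function: each output is an independent binary probability
    return 1.0 / (1.0 + np.exp(-z))

def softmax(z):
    # Exponentiate (shifted by the max for numerical stability), then normalize
    e = np.exp(z - z.max())
    return e / e.sum()

# Hypothetical raw scores for the 10 digit classes
scores = np.array([2.0, 1.0, 0.5, -1.0, 3.0, 0.0, -0.5, 1.5, 0.2, -2.0])

p_sigmoid = sigmoid(scores)
p_softmax = softmax(scores)

print("sum of sigmoid outputs:", p_sigmoid.sum())  # about 5.82, not constrained to 1
print("sum of softmax outputs:", p_softmax.sum())  # 1.0 up to rounding
```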

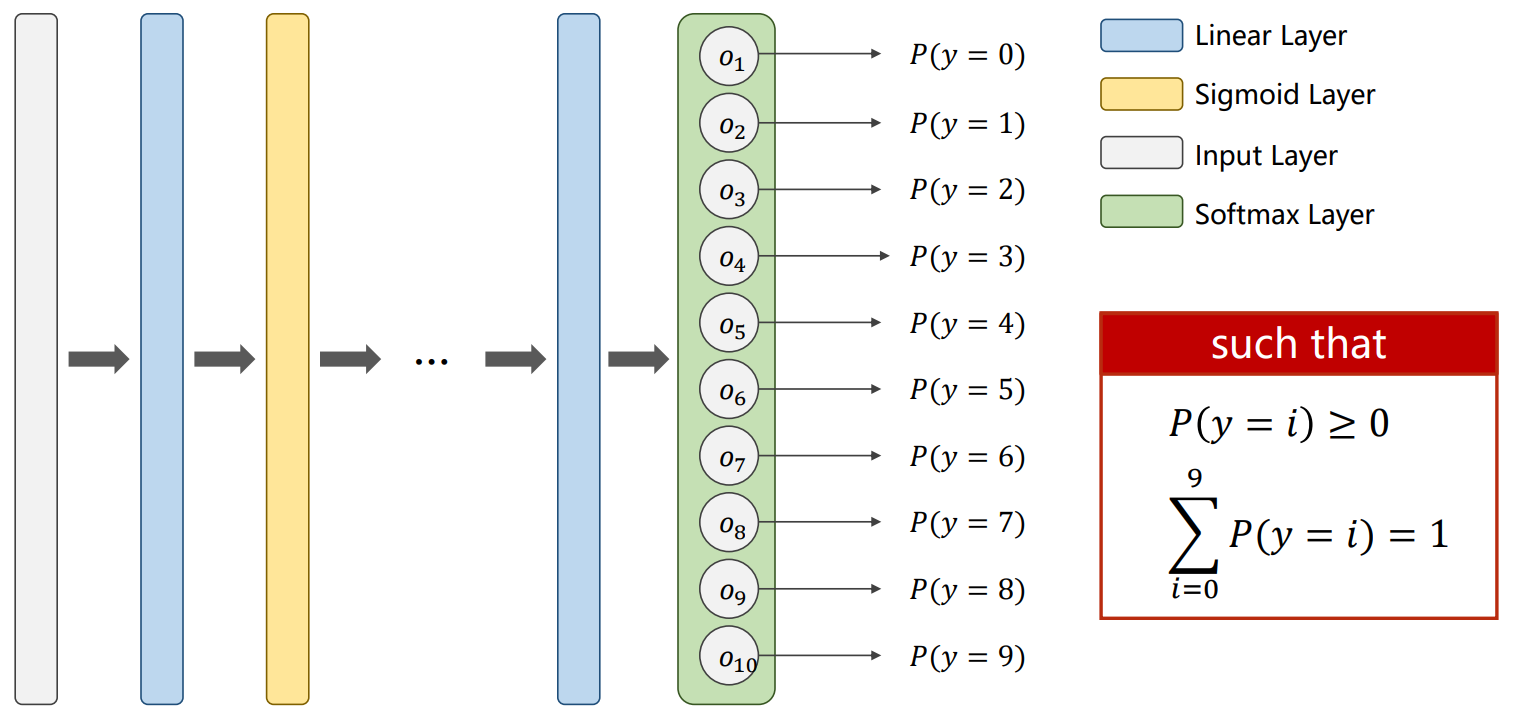

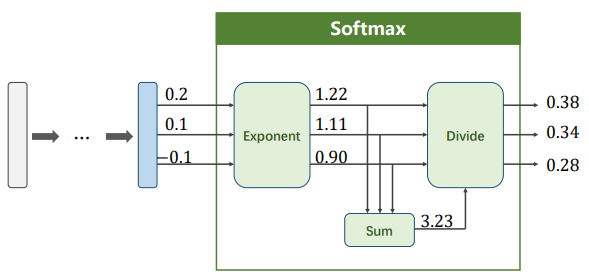

Output a Distribution of Prediction with Softmax

Replace the final layer with a Softmax layer.

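Suppose $z \in \mathbb{R}^K$ is the output of the last Linear layer. The Softmax function is:

$$P(y = i) = \frac{e^{z_i}}{\sum_{j=0}^{K-1} e^{z_j}}, \quad i \in \{0, \dots, K-1\}$$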

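There are K classes in total. The exponential guarantees that every output is greater than 0, and dividing by the sum of all the exponentials makes the outputs add up to 1.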

Example:

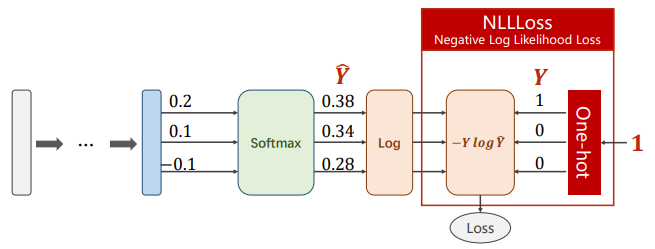

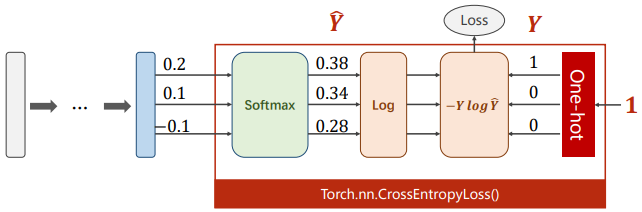
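The formula translates directly into NumPy. This is a minimal sketch. Subtracting the maximum score before exponentiating is a common numerical-stability trick added here; it is not part of the formula above. It leaves the result unchanged, because the factor $e^{-\max_j z_j}$ cancels between numerator and denominator. The scores `[0.2, 0.1, -0.1]` are example values:

```python
import numpy as np

def softmax(z):
    """Softmax over the last axis of z."""
    shifted = z - np.max(z, axis=-1, keepdims=True)  # avoid overflow in exp
    e = np.exp(shifted)                               # every entry is > 0
    return e / e.sum(axis=-1, keepdims=True)          # entries sum to 1

z = np.array([0.2, 0.1, -0.1])
p = softmax(z)
print(np.round(p, 2))                     # [0.38 0.34 0.28]

# Without the shift, exp(1000) would overflow to inf; with it the result is fine
print(softmax(np.array([1000.0, 1000.0])))  # [0.5 0.5]
```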

Loss Function - Cross Entropy
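Cross entropy measures how far the predicted distribution $\hat{Y}$ is from the one-hot label $Y$:

$$\text{Loss}(\hat{Y}, Y) = -\sum_i Y_i \log \hat{Y}_i$$

Because $Y$ is one-hot, only the true class $t$ contributes. The loss therefore reduces to

$$-\log \hat{Y}_t = -z_t + \log \sum_j e^{z_j}$$

The NumPy sketch below checks that the three forms agree. It uses example scores `z = [0.2, 0.1, -0.1]` with true class 0:

```python
import numpy as np

y = np.array([1, 0, 0])            # one-hot label, true class t = 0
z = np.array([0.2, 0.1, -0.1])     # raw scores from the last linear layer
t = int(np.argmax(y))

y_pred = np.exp(z) / np.exp(z).sum()            # Softmax

loss_full = (-y * np.log(y_pred)).sum()          # sum over all classes
loss_true = -np.log(y_pred[t])                   # only the true-class term
loss_lse = -z[t] + np.log(np.exp(z).sum())       # log-sum-exp form

print(loss_full, loss_true, loss_lse)            # all approximately 0.9729
```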

Cross Entropy in Numpy

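```python
import numpy as np

y = np.array([1, 0, 0])                # one-hot label: the true class is 0
z = np.array([0.2, 0.1, -0.1])         # raw outputs of the last linear layer
y_pred = np.exp(z) / np.exp(z).sum()   # Softmax
loss = (-y * np.log(y_pred)).sum()     # cross entropy
print(loss)
```

Cross Entropy in PyTorch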

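```python
import torch

criterion = torch.nn.CrossEntropyLoss()
Y = torch.LongTensor([2, 0, 1])   # true class index for each of the 3 samples

# Raw scores (no Softmax applied); each comment gives the row's highest-scoring class
Y_pred1 = torch.Tensor([[0.1, 0.2, 0.9],   # 2
                        [1.1, 0.1, 0.2],   # 0
                        [0.2, 2.1, 0.1]])  # 1
Y_pred2 = torch.Tensor([[0.8, 0.2, 0.3],   # 0
                        [0.2, 0.3, 0.5],   # 2
                        [0.2, 0.2, 0.5]])  # 2

l1 = criterion(Y_pred1, Y)
l2 = criterion(Y_pred2, Y)
print("Batch Loss1 =", l1.data, "\nBatch Loss2 =", l2.data)
```

The first prediction is more accurate: its highest-scoring class matches every label, while the second matches none. The actual losses are: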

- Batch Loss1 = tensor(0.4966)

- Batch Loss2 = tensor(1.2389)
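`CrossEntropyLoss` takes raw scores (logits) and integer class labels. For each sample it computes $-\log \text{softmax}(z)_y$, and by default it averages over the batch. The NumPy sketch below reproduces the two batch losses above under that reading:

```python
import numpy as np

def batch_cross_entropy(logits, labels):
    """Mean over the batch of -log softmax(logits)[label]."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    # Pick the log-probability of the true class in each row
    picked = log_probs[np.arange(len(labels)), labels]
    return -picked.mean()

Y = np.array([2, 0, 1])
Y_pred1 = np.array([[0.1, 0.2, 0.9],
                    [1.1, 0.1, 0.2],
                    [0.2, 2.1, 0.1]])
Y_pred2 = np.array([[0.8, 0.2, 0.3],
                    [0.2, 0.3, 0.5],
                    [0.2, 0.2, 0.5]])

print(round(batch_cross_entropy(Y_pred1, Y), 4))  # 0.4966
print(round(batch_cross_entropy(Y_pred2, Y), 4))  # 1.2389
```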

Tips

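PyTorch also provides `Softmax`, `LogSoftmax`, and `NLLLoss` modules.

`CrossEntropyLoss` already includes the Softmax step (it is equivalent to `LogSoftmax` followed by `NLLLoss`). The last layer of the network should therefore output raw scores without an activation.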

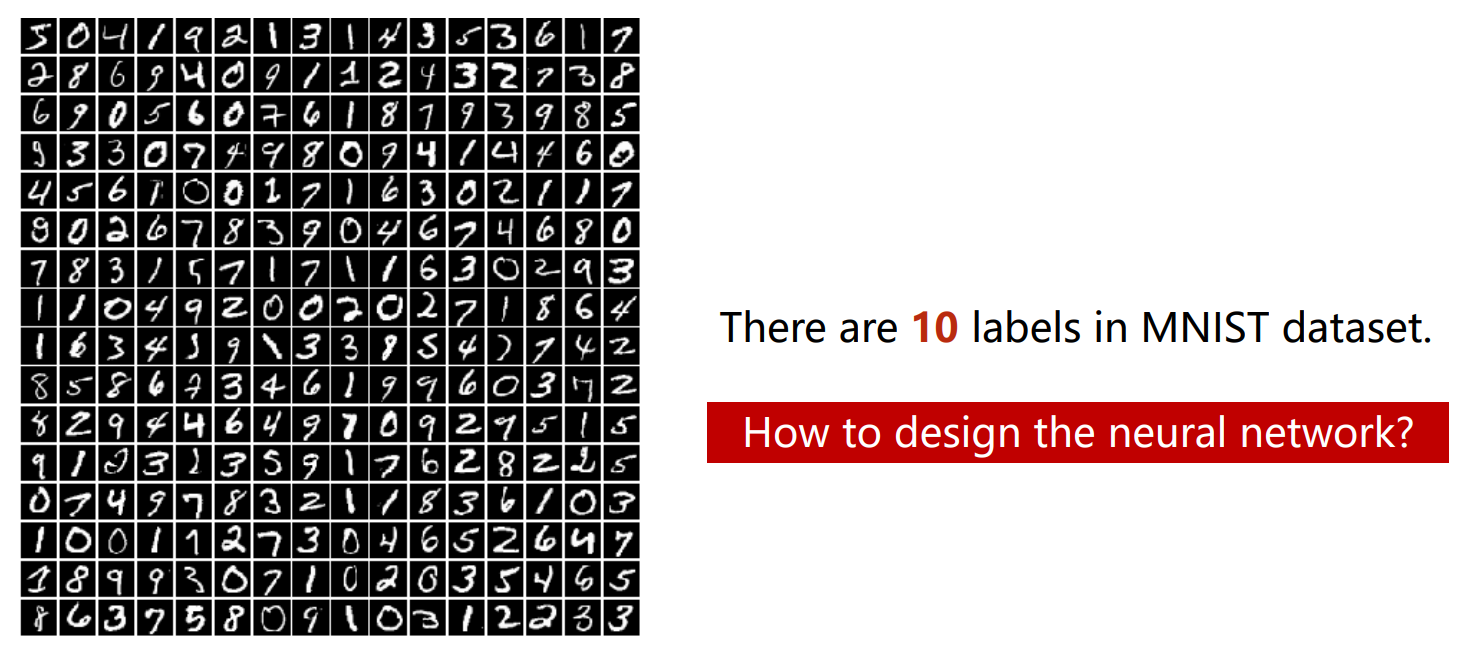

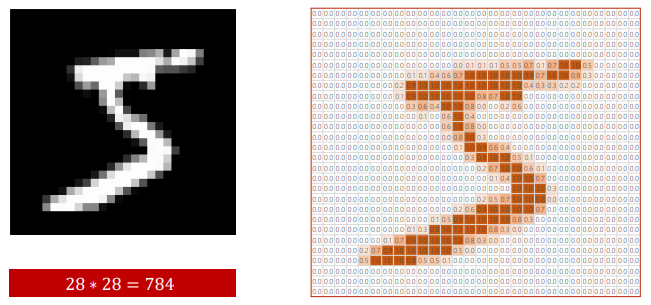
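The following sketch checks that `CrossEntropyLoss` already contains the Softmax step. It compares the loss with `LogSoftmax` followed by `NLLLoss`, using random example logits assumed for illustration. It also shows what happens if a Softmax is applied before the loss anyway:

```python
import torch
import torch.nn as nn

torch.manual_seed(0)
logits = torch.randn(4, 3)           # example raw scores: 4 samples, 3 classes
target = torch.tensor([2, 0, 1, 1])  # true class indices (int64)

ce = nn.CrossEntropyLoss()(logits, target)

# The same loss written as two explicit steps
log_probs = nn.LogSoftmax(dim=1)(logits)
nll = nn.NLLLoss()(log_probs, target)
print(ce.item(), nll.item())
print(torch.allclose(ce, nll))       # True

# Mistake to avoid: a Softmax before CrossEntropyLoss applies Softmax twice,
# which generally yields a different loss value
double = nn.CrossEntropyLoss()(torch.softmax(logits, dim=1), target)
print(double.item())
```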

Back to MNIST Dataset

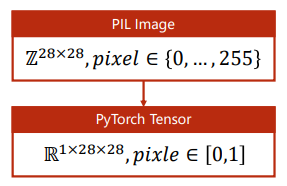

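The MNIST dataset consists of $28 \times 28$ images. Each pixel holds a number in [0, 255] according to its brightness. Mapping [0, 255] into [0, 1] gives the figure on the right.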

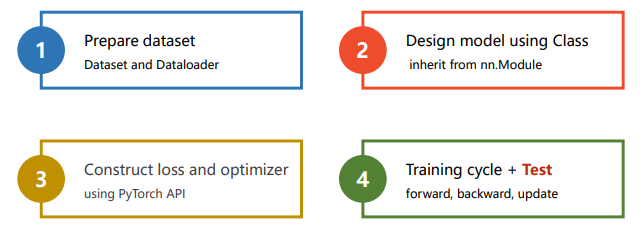

Implementation of a Classifier for the MNIST Dataset

Compared with the previous four-step workflow, a test step is added.

0. Import Package

```python
import torch
from torchvision import transforms
from torchvision import datasets
from torch.utils.data import DataLoader
import torch.nn.functional as F
import torch.optim as optim
```

1. Prepare Dataset
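Before the real MNIST code, here is a small sketch of what `DataLoader` does with `batch_size = 64`. It uses a hypothetical in-memory stand-in dataset (a `TensorDataset` of blank images), so it runs without downloading anything. When the dataset size is not a multiple of the batch size, the last batch is simply smaller:

```python
import math
import torch
from torch.utils.data import TensorDataset, DataLoader

# Hypothetical stand-in for MNIST: 200 blank 1x28x28 "images" with random labels
images = torch.zeros(200, 1, 28, 28)
labels = torch.randint(0, 10, (200,))
loader = DataLoader(TensorDataset(images, labels), batch_size=64, shuffle=True)

sizes = [x.shape[0] for x, _ in loader]
print(len(loader), sizes)                 # 4 [64, 64, 64, 8]

# The same arithmetic for the 60,000-image MNIST training split
print(math.ceil(60000 / 64), 60000 % 64)  # 938 32
```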

```python
batch_size = 64
transform = transforms.Compose([
    transforms.ToTensor(),                        # Convert the PIL Image to Tensor
    transforms.Normalize((0.1307, ), (0.3081, ))  # (x - mean) / std
])

train_dataset = datasets.MNIST(root='../dataset/mnist',
                               train=True,
                               transform=transform,
                               download=True)
train_loader = DataLoader(dataset=train_dataset,
                          batch_size=batch_size,
                          shuffle=True)
test_dataset = datasets.MNIST(root='../dataset/mnist',
                              train=False,
                              transform=transform,
                              download=True)
test_loader = DataLoader(dataset=test_dataset,
                         batch_size=batch_size,
                         shuffle=False)
```

How is the PIL Image handled?

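`transforms.ToTensor()` converts a $28 \times 28$ PIL Image with pixel values in $\{0, \dots, 255\}$ into a tensor of shape $1 \times 28 \times 28$ with values in $[0, 1]$. The 1 is the channel dimension (a grayscale image has only one channel), and the two 28s are the height and width.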

Tips

Neural networks tend to train better on inputs that are small and roughly centered around 0, e.g. within [-1, 1].

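`transforms.ToTensor()` first converts the image to a tensor. `transforms.Normalize((0.1307, ), (0.3081, ))` then standardizes it: the first argument is the mean and the second is the standard deviation (std), so each pixel $x$ becomes $(x - 0.1307) / 0.3081$. These two values are the mean and std of the MNIST training pixels.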

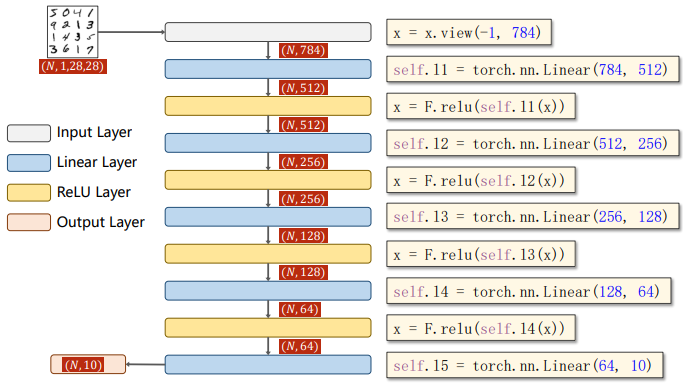

2. Design Model

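Notes:

- Use the more common ReLU as the activation function.

- Do not apply an activation to the final output layer.

- A fully connected network expects its input to be a matrix, but each sample image is a 3rd-order tensor ($1 \times 28 \times 28$). It must therefore be reshaped (flattened) into a 1st-order tensor of 784 elements, so that a batch of $N$ images becomes an $N \times 784$ matrix.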
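The reshape in `forward` can be checked in isolation. The sketch below uses a dummy batch shaped like the DataLoader output ($N \times 1 \times 28 \times 28$). `torch.flatten(x, start_dim=1)` is shown as an equivalent alternative; it is not what the original code uses:

```python
import torch

batch = torch.zeros(64, 1, 28, 28)   # dummy batch: N x C x H x W

flat = batch.view(-1, 784)           # -1 lets PyTorch infer N = 64
print(flat.shape)                    # torch.Size([64, 784])

# Alternative that keeps the batch dimension explicitly
flat2 = torch.flatten(batch, start_dim=1)
print(flat2.shape)                   # torch.Size([64, 784])
```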

```python
class Net(torch.nn.Module):
    def __init__(self):
        super().__init__()
        self.l1 = torch.nn.Linear(784, 512)
        self.l2 = torch.nn.Linear(512, 256)
        self.l3 = torch.nn.Linear(256, 128)
        self.l4 = torch.nn.Linear(128, 64)
        self.l5 = torch.nn.Linear(64, 10)

    def forward(self, x):
        x = x.view(-1, 784)       # flatten N x 1 x 28 x 28 into N x 784
        x = F.relu(self.l1(x))
        x = F.relu(self.l2(x))
        x = F.relu(self.l3(x))
        x = F.relu(self.l4(x))
        return self.l5(x)         # raw scores; CrossEntropyLoss applies the Softmax

model = Net()
```

3. Construct Loss and Optimizer

```python
criterion = torch.nn.CrossEntropyLoss()
optimizer = optim.SGD(model.parameters(), lr=0.01, momentum=0.5)
```

4. Train and Test

```python
def train(epoch):
    running_loss = 0.0
    for batch_idx, data in enumerate(train_loader, 0):
        inputs, target = data
        optimizer.zero_grad()

        # forward + backward + update
        outputs = model(inputs)
        loss = criterion(outputs, target)
        loss.backward()
        optimizer.step()

        running_loss += loss.item()
        if batch_idx % 300 == 299:  # report the average loss every 300 batches
            print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))
            running_loss = 0.0


def test():
    correct = 0
    total = 0
    with torch.no_grad():  # no gradients are needed for evaluation
        for data in test_loader:
            images, labels = data
            outputs = model(images)
            # The predicted class is the index of the largest output in each row
            _, predicted = torch.max(outputs, dim=1)
            total += labels.size(0)
            correct += (predicted == labels).sum().item()
    print('Accuracy on test set: %d %%' % (100 * correct / total))
```

if __name__ == '__main__':

for epoch in range(10):

train(epoch)

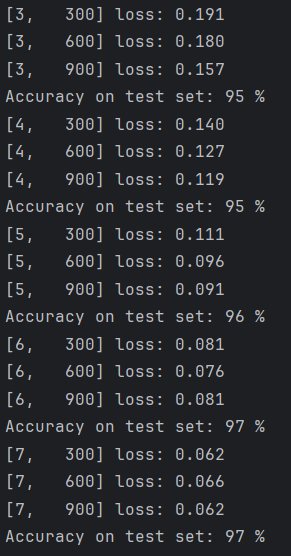

test()结果:
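`test()` picks the class with the largest raw output instead of applying Softmax first. Because Softmax is monotonic, the largest logit is also the most probable class. The sketch below runs the same accuracy computation on a few made-up logits and labels:

```python
import torch

# Hypothetical logits for 4 test images over 10 classes, and their true labels
outputs = torch.tensor([
    [0.1, 2.0, 0.3, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],  # largest at index 1
    [3.0, 0.2, 0.1, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0],  # largest at index 0
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 1.5, 0.0, 0.2],  # largest at index 7
    [0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.4, 0.9],  # largest at index 9
])
labels = torch.tensor([1, 0, 7, 4])

_, predicted = torch.max(outputs, dim=1)   # index of the largest logit per row
correct = (predicted == labels).sum().item()
total = labels.size(0)

# Softmax does not change which class is largest
same = torch.equal(predicted, torch.softmax(outputs, dim=1).argmax(dim=1))

print(predicted.tolist())                          # [1, 0, 7, 9]
print(same)                                        # True
print('Accuracy: %d %%' % (100 * correct / total))  # Accuracy: 75 %
```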