
Basic CNN

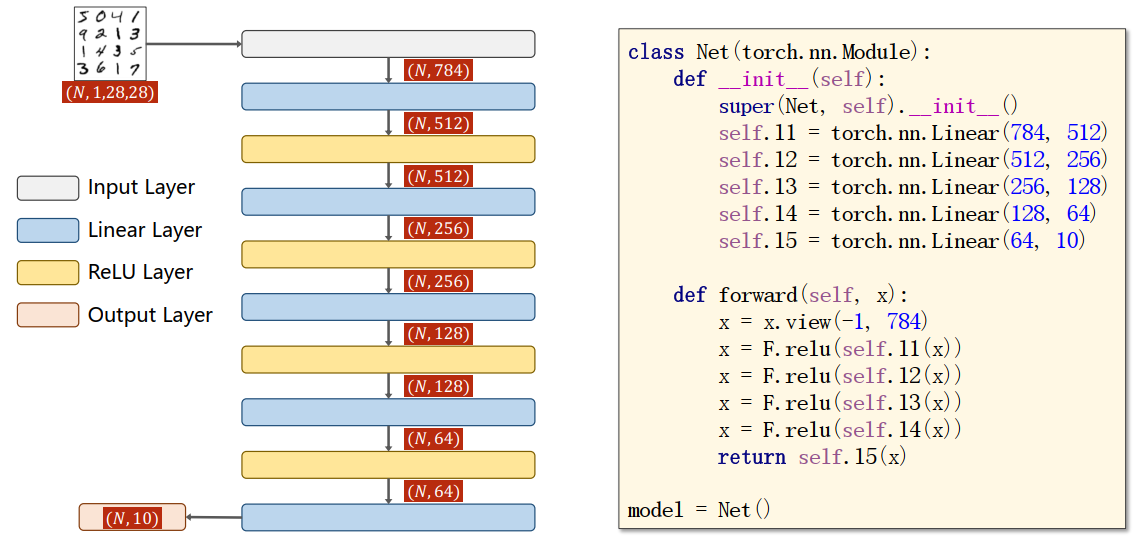

Review: Fully Connected Neural Network

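Fully Connected Neural Network: a network built only from fully connected (linear) layers, in which every neuron is connected to every output of the previous layer.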

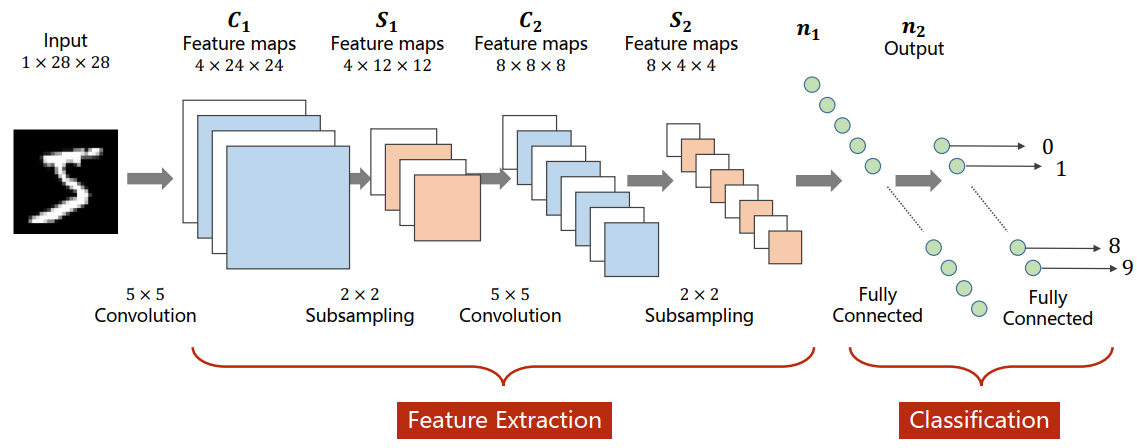

Convolutional Neural Network

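Convolutional Neural Network (CNN): a network that extracts features with convolution and subsampling layers before classifying them with fully connected layers.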

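A fully connected network flattens the image directly into a first-order tensor (a vector). Pixels that were adjacent in the image, such as vertical neighbors, may no longer be adjacent, so the image loses its spatial information.

A convolutional neural network, by contrast, preserves the original spatial information.

Feature extraction: convolution and subsampling, which turn the image into a feature vector.

Classification: a fully connected network.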
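To make the loss of adjacency concrete, here is a small pure-Python illustration (not from the lecture; the helper `flat_index` is hypothetical). It assumes row-major flattening, which is what `x.view(batch_size, -1)` does for a contiguous tensor: horizontal neighbors stay next to each other, but vertical neighbors end up a full row apart.

```python
def flat_index(row: int, col: int, width: int) -> int:
    """Position of pixel (row, col) after row-major flattening of a width-wide image."""
    return row * width + col


WIDTH = 28  # MNIST images are 28 x 28

right = flat_index(0, 1, WIDTH) - flat_index(0, 0, WIDTH)  # horizontal neighbor
below = flat_index(1, 0, WIDTH) - flat_index(0, 0, WIDTH)  # vertical neighbor
print(right, below)  # 1 28
```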

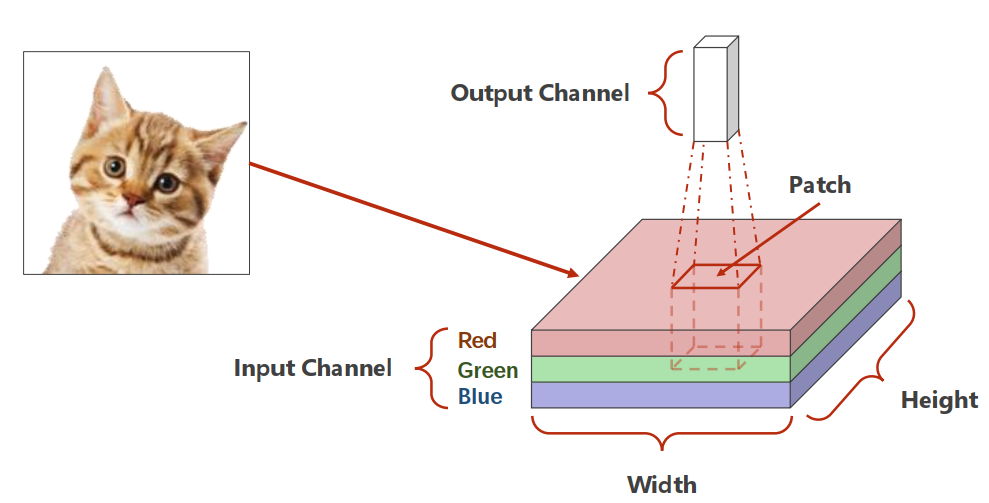

Convolution

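Images are raster images: each channel is a grid of pixel values, so an image is stored as a channels × height × width tensor.

Convolving patches of the image produces the output channels; the number of channels, the height, and the width can all change.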

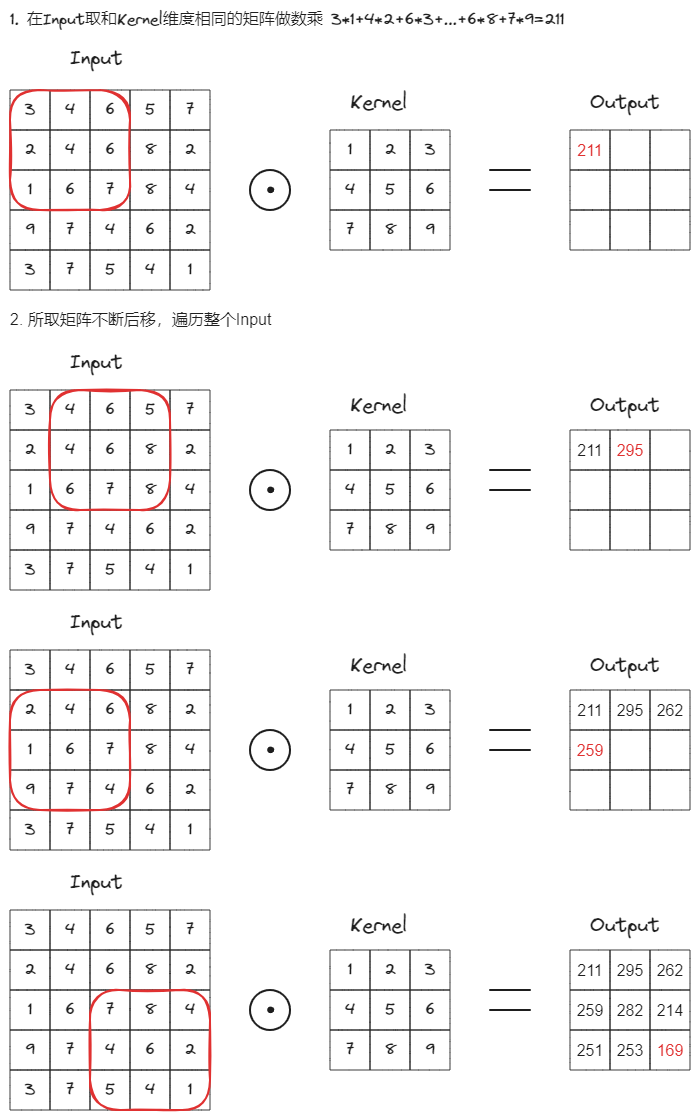

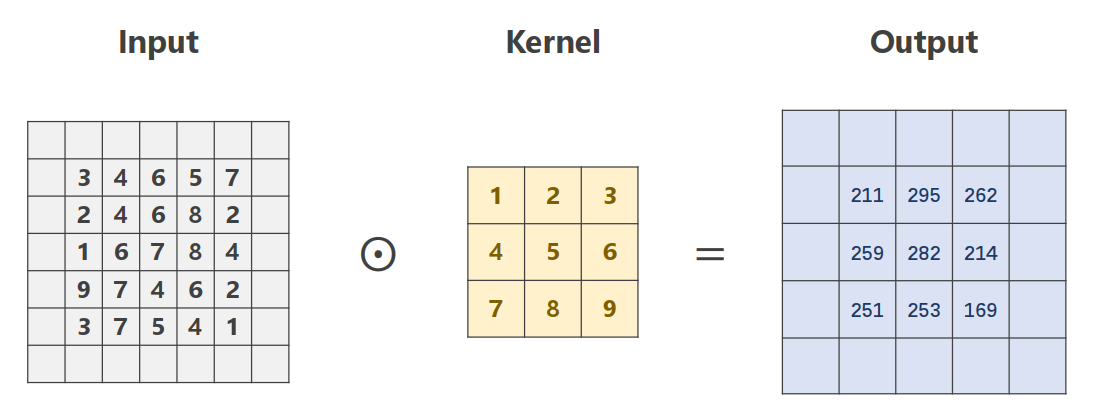
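How much the height and width change can be computed per spatial dimension. The sketch below assumes PyTorch's `Conv2d` convention with dilation 1, out = ⌊(in + 2·padding − kernel_size) / stride⌋ + 1; the helper name `conv_out_size` is hypothetical, and padding and stride (defaults 0 and 1) are covered in their own subsections.

```python
def conv_out_size(size: int, kernel_size: int, padding: int = 0, stride: int = 1) -> int:
    """Output length along one spatial dimension (dilation assumed to be 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1


# The channel count is set separately, by the number of kernels;
# height and width follow the formula above.
print(conv_out_size(100, 3))             # 98
print(conv_out_size(5, 3, padding=1))    # 5
print(conv_out_size(5, 3, stride=2))     # 2
```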

Single Input Channel

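Single-channel convolution, step by step:

The input is a $1 \times 5 \times 5$ image and the kernel is $3 \times 3$. The kernel slides over the image; at each position the overlapping values are multiplied element-wise and summed into one output value, giving a $1 \times 3 \times 3$ output.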

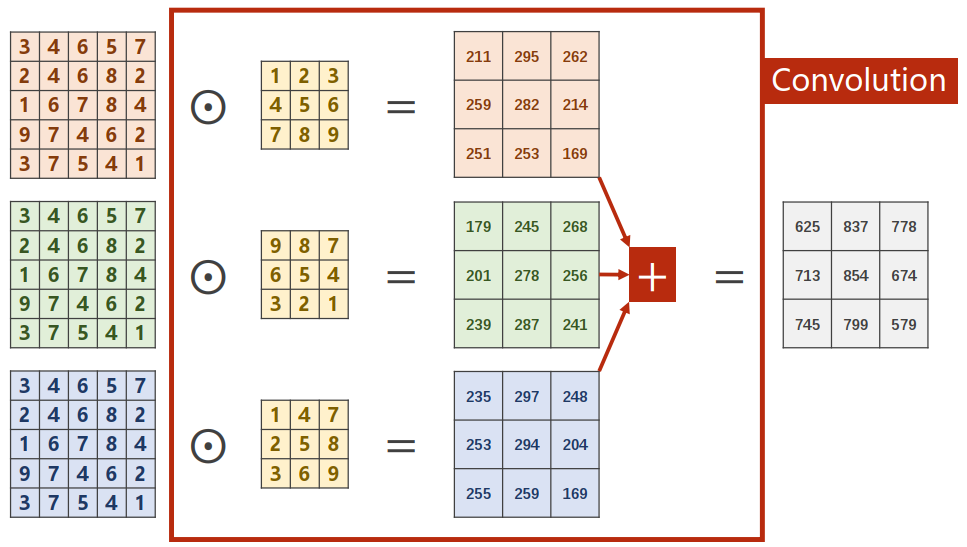

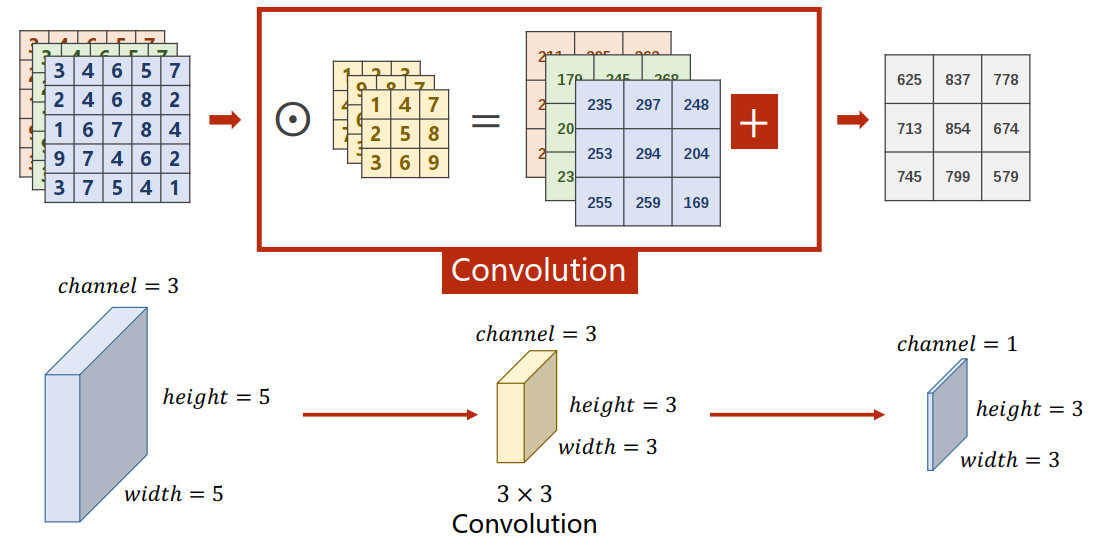
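The single-channel computation can be sketched in plain Python (the function name `conv2d_single` is hypothetical). Like `torch.nn.Conv2d`, it computes a cross-correlation: the kernel is not flipped, and each output element is the sum of the element-wise product of the kernel and the patch under it. The input and the 1 to 9 kernel are the same values used in the PyTorch padding and stride examples of these notes.

```python
def conv2d_single(image, kernel):
    """Valid (no padding, stride 1) cross-correlation of a 2-D image with a 2-D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel with the kh x kw patch at (i, j) and sum the products.
            row.append(sum(image[i + a][j + b] * kernel[a][b]
                           for a in range(kh) for b in range(kw)))
        output.append(row)
    return output


image = [[3, 4, 6, 5, 7],
         [2, 4, 6, 8, 2],
         [1, 6, 7, 8, 4],
         [9, 7, 4, 6, 2],
         [3, 7, 5, 4, 1]]
kernel = [[1, 2, 3],
          [4, 5, 6],
          [7, 8, 9]]
for row in conv2d_single(image, kernel):
    print(row)
# [211, 295, 262]
# [259, 282, 214]
# [251, 253, 169]
```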

3 Input Channels

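With three input channels, each channel is convolved with its own channel of the kernel, and the three results are added element-wise to form a single output channel.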

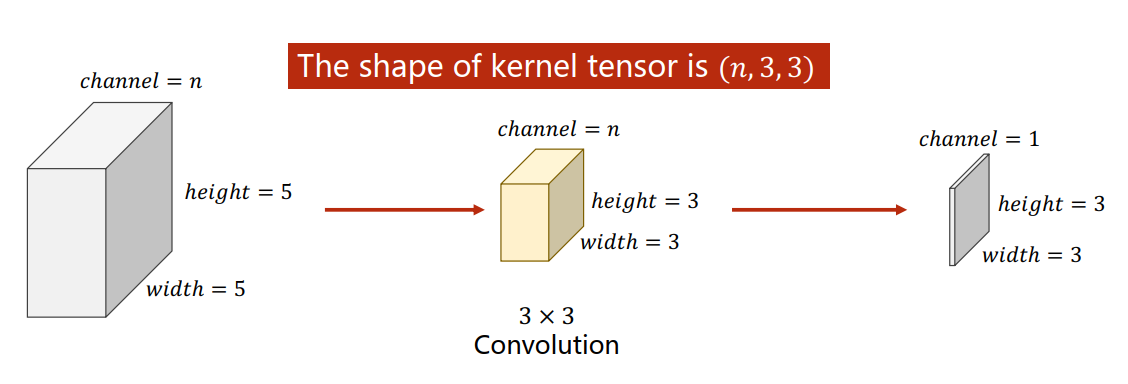

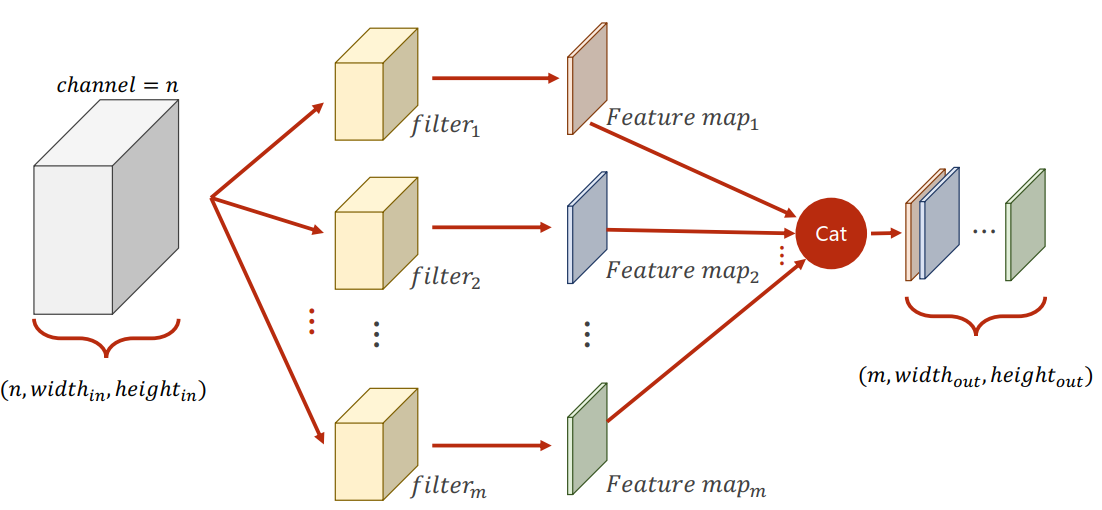
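A plain-Python sketch of the three-channel case (helper names are hypothetical): each input channel is convolved with the matching kernel channel, and the per-channel results are summed element-wise. Here channel c is a 3×3 grid filled with the value c, so each 2×2 all-ones kernel channel contributes 4·c to every output cell.

```python
def conv2d_single(image, kernel):
    """Valid, stride-1 cross-correlation of one 2-D channel with one 2-D kernel."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(len(image[0]) - kw + 1)]
            for i in range(len(image) - kh + 1)]


def conv2d_multi_in(channels, kernel_channels):
    """Convolve each input channel with its own kernel channel and sum the results."""
    assert len(channels) == len(kernel_channels), "kernel needs one channel per input channel"
    per_channel = [conv2d_single(c, k) for c, k in zip(channels, kernel_channels)]
    # Element-wise sum over channels -> a single output channel.
    return [[sum(p[i][j] for p in per_channel) for j in range(len(per_channel[0][0]))]
            for i in range(len(per_channel[0]))]


channels = [[[c] * 3 for _ in range(3)] for c in (1, 2, 3)]  # 3 x 3 x 3 input
kernels = [[[1, 1], [1, 1]] for _ in range(3)]                # 3 x 2 x 2 kernel
print(conv2d_multi_in(channels, kernels))  # [[24, 24], [24, 24]]
```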

N Input Channels

A kernel must have as many channels as the input.

To get m output channels, you need m kernels.
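Putting the two rules together, a naive convolution layer can be sketched in plain Python (hypothetical names; no bias, stride 1, no padding). The weights are indexed as `weight[m][n][kh][kw]`, the same layout as `conv_layer.weight` in PyTorch: one n-channel kernel per output channel.

```python
def conv_layer(x, weight):
    """x: n x H x W input; weight: m x n x kh x kw. Returns an m x H' x W' output."""
    n, h, w = len(x), len(x[0]), len(x[0][0])
    kh, kw = len(weight[0][0]), len(weight[0][0][0])
    out = []
    for kernel in weight:  # one n-channel kernel per output channel
        assert len(kernel) == n, "each kernel needs as many channels as the input"
        out.append([[sum(x[c][i + a][j + b] * kernel[c][a][b]
                         for c in range(n) for a in range(kh) for b in range(kw))
                     for j in range(w - kw + 1)]
                    for i in range(h - kh + 1)])
    return out


x = [[[1, 2, 3], [4, 5, 6], [7, 8, 9]],  # channel 0
     [[1, 1, 1], [1, 1, 1], [1, 1, 1]]]  # channel 1 -> input shape 2 x 3 x 3
weight = [[[[1, 0], [0, 0]], [[0, 0], [0, 0]]],  # kernel 0: top-left value of channel 0
          [[[0, 0], [0, 0]], [[1, 1], [1, 1]]],  # kernel 1: sum of a 2 x 2 patch of channel 1
          [[[0, 0], [0, 1]], [[0, 0], [0, 0]]]]  # kernel 2: bottom-right value of channel 0
y = conv_layer(x, weight)
print(len(y), len(y[0]), len(y[0][0]))  # 3 2 2
```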

Convolution Layer

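Input: $n \times h \times w$ ($n$ channels)

Output: $m \times h' \times w'$ ($m$ channels)

This requires $m$ kernels, each of size $n \times k_h \times k_w$.

The $m$ kernels can be stacked into a four-dimensional tensor of shape $m \times n \times k_h \times k_w$, which is the shape of the layer's weight.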

import torch

in_channels, out_channels = 5, 10

width, height = 100, 100

kernel_size = 3

batch_size = 1

input = torch.randn(batch_size,

in_channels,

width,

height)

conv_layer = torch.nn.Conv2d(in_channels,

out_channels,

kernel_size=kernel_size)

output = conv_layer(input)

print(input.shape)

print(output.shape)

print(conv_layer.weight.shape)输出结果:

torch.Size([1, 5, 100, 100])

torch.Size([1, 10, 98, 98])

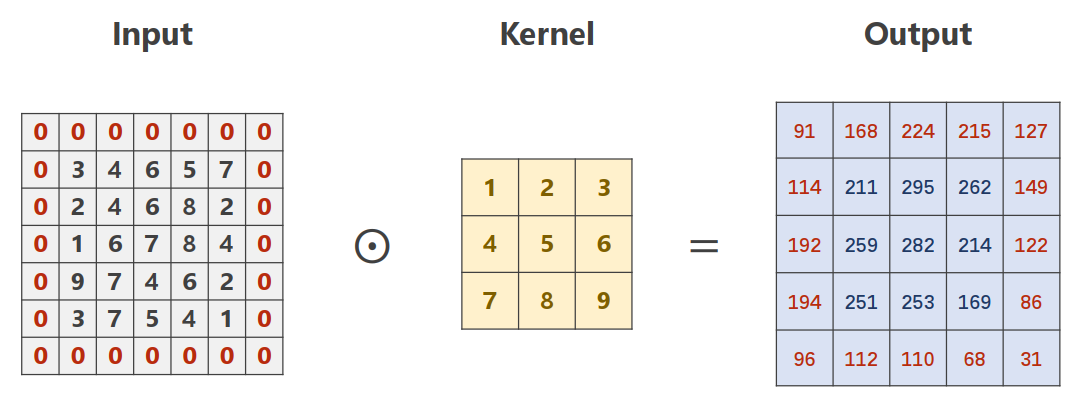

torch.Size([10, 5, 3, 3])padding

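Padding adds a border of zeros around the input. Here padding=1, so a 5×5 input convolved with a 3×3 kernel gives a 5×5 output.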
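A plain-Python sketch of what padding does (helper names are hypothetical): zero-pad the input, then run the same valid cross-correlation as before. With the 5×5 example and the 1 to 9 kernel, the output is 5×5 and its central 3×3 block equals the unpadded result.

```python
def zero_pad(image, p):
    """Surround a 2-D list with p rows/columns of zeros on every side."""
    w = len(image[0]) + 2 * p
    padded = [[0] * w for _ in range(p)]
    padded += [[0] * p + list(row) + [0] * p for row in image]
    padded += [[0] * w for _ in range(p)]
    return padded


def conv2d_single(image, kernel):
    """Valid, stride-1 cross-correlation (no kernel flip), as torch.nn.Conv2d computes."""
    kh, kw = len(kernel), len(kernel[0])
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(len(image[0]) - kw + 1)]
            for i in range(len(image) - kh + 1)]


image = [[3, 4, 6, 5, 7],
         [2, 4, 6, 8, 2],
         [1, 6, 7, 8, 4],
         [9, 7, 4, 6, 2],
         [3, 7, 5, 4, 1]]
kernel = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
out = conv2d_single(zero_pad(image, 1), kernel)
print(len(out), len(out[0]))  # 5 5
print(out[0][0], out[1][1])   # 91 211
```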

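```python
import torch

input = [3, 4, 6, 5, 7,
         2, 4, 6, 8, 2,
         1, 6, 7, 8, 4,
         9, 7, 4, 6, 2,
         3, 7, 5, 4, 1]
# Reshape to (batch, channels, height, width)
input = torch.Tensor(input).view(1, 1, 5, 5)

# 1 input channel, 1 output channel, 3x3 kernel, one ring of zero padding, no bias
conv_layer = torch.nn.Conv2d(1, 1, kernel_size=3, padding=1, bias=False)

# Kernel weights 1..9, shaped (out_channels, in_channels, kH, kW)
kernel = torch.Tensor([1, 2, 3, 4, 5, 6, 7, 8, 9]).view(1, 1, 3, 3)
conv_layer.weight.data = kernel.data  # overwrite the randomly initialized weights

output = conv_layer(input)
print(output)  # shape (1, 1, 5, 5)
```

Tips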

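To keep the output width and height equal to the input's, set padding = ⌊kernel_size / 2⌋ (i.e. `kernel_size // 2`). This holds for odd kernel sizes with stride 1.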
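A quick check of this rule with the output-size formula (hypothetical helper, stride 1, dilation assumed to be 1). For stride 1 the output size is in + 2·padding − kernel_size + 1, so keeping it equal to in requires 2·padding = kernel_size − 1, which is only possible when kernel_size is odd.

```python
def conv_out_size(size, kernel_size, padding=0, stride=1):
    """Output length along one dimension (dilation assumed to be 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1


for k in (1, 3, 5, 7):
    print(k, conv_out_size(28, k, padding=k // 2))  # size stays 28 for odd k
print(4, conv_out_size(28, 4, padding=4 // 2))      # 29: an even kernel overshoots by one
```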

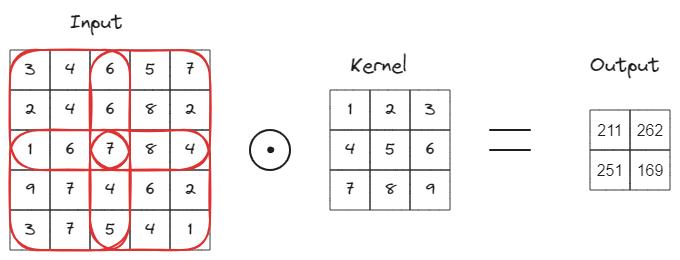

stride

The stride is the step by which the kernel moves across the input; here stride=2.
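With stride s the kernel jumps s cells at a time, so only every s-th window is evaluated. A plain-Python sketch (the helper `conv2d_strided` is hypothetical) on the same 5×5 input and 1 to 9 kernel as the PyTorch stride=2 example in this subsection:

```python
def conv2d_strided(image, kernel, stride=1):
    """Valid cross-correlation where the kernel moves `stride` cells per step."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    return [[sum(image[i * stride + a][j * stride + b] * kernel[a][b]
                 for a in range(kh) for b in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


image = [[3, 4, 6, 5, 7],
         [2, 4, 6, 8, 2],
         [1, 6, 7, 8, 4],
         [9, 7, 4, 6, 2],
         [3, 7, 5, 4, 1]]
kernel = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(conv2d_strided(image, kernel, stride=2))  # [[211, 262], [251, 169]]
```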

import torch

input = [3, 4, 6, 5, 7,

2, 4, 6, 8, 2,

1, 6, 7, 8, 4,

9, 7, 4, 6, 2,

3, 7, 5, 4, 1]

input = torch.Tensor(input).view(1, 1, 5, 5)

conv_layer = torch.nn.Conv2d(1, 1, kernel_size=3, stride=2, bias=False)

kernel = torch.Tensor([1,2,3,4,5,6,7,8,9]).view(1, 1, 3, 3)

conv_layer.weight.data = kernel.data

output = conv_layer(input)

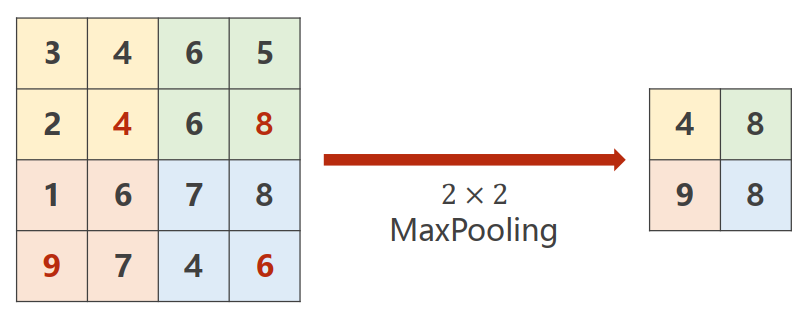

print(output)Subsampling: Max Pooling Layer

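The input is divided into non-overlapping groups (2×2 here), the maximum of each group is taken, and the maxima are assembled into the output.

The number of channels does not change, because pooling is applied to each channel separately.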
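A plain-Python sketch of 2×2 max pooling (the helper `max_pool2d` is hypothetical; it assumes stride equal to the window size and no padding, matching `MaxPool2d(kernel_size=2)` defaults). Pooling each channel on its own is why the channel count stays the same.

```python
def max_pool2d(channel, k=2):
    """Non-overlapping k x k max pooling of one 2-D channel (stride = k, no padding)."""
    out_h, out_w = len(channel) // k, len(channel[0]) // k
    return [[max(channel[i * k + a][j * k + b] for a in range(k) for b in range(k))
             for j in range(out_w)]
            for i in range(out_h)]


x = [[3, 4, 6, 5],
     [2, 4, 6, 8],
     [1, 6, 7, 8],
     [9, 7, 4, 6]]
print(max_pool2d(x))  # [[4, 8], [9, 8]]

channels = [x, [[-v for v in row] for row in x]]  # a 2-channel input
pooled = [max_pool2d(c) for c in channels]        # pool each channel on its own
print(len(pooled))  # 2
```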

import torch

input = [3, 4, 6, 5,

2, 4, 6, 8,

1, 6, 7, 8,

9, 7, 4, 6,

]

input = torch.Tensor(input).view(1, 1, 4, 4)

maxpooling_layer = torch.nn.MaxPool2d(kernel_size=2)

output = maxpooling_layer(input)

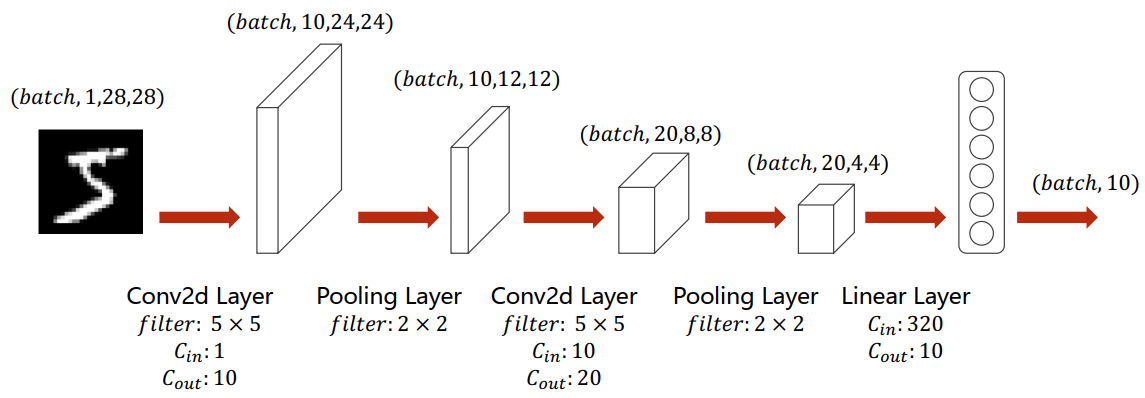

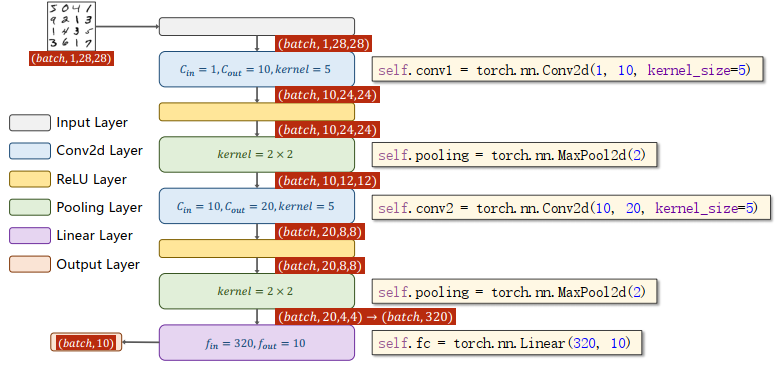

print(output)A Simple Convolutional Neural Network

```python
import torch
import torch.nn.functional as F


class Net(torch.nn.Module):
    def __init__(self):
        super().__init__()
        self.conv1 = torch.nn.Conv2d(1, 10, kernel_size=5)
        self.conv2 = torch.nn.Conv2d(10, 20, kernel_size=5)
        self.pooling = torch.nn.MaxPool2d(2)
        self.fc = torch.nn.Linear(320, 10)

    def forward(self, x):
        # x: (n, 1, 28, 28)
        batch_size = x.size(0)
        x = F.relu(self.pooling(self.conv1(x)))  # block 1: conv, pool, ReLU -> (n, 10, 12, 12)
        x = F.relu(self.pooling(self.conv2(x)))  # block 2: conv, pool, ReLU -> (n, 20, 4, 4)
        x = x.view(batch_size, -1)               # flatten -> (n, 320)
        x = self.fc(x)                           # fully connected -> (n, 10)
        return x
```

To use it, simply replace the model in the previous lecture's code with this one.
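Where does the 320 in `Linear(320, 10)` come from? A plain-Python shape trace, assuming 1×28×28 MNIST inputs and PyTorch's defaults (convolution: stride 1, padding 0; `MaxPool2d(2)`: stride 2). The helper `out_size` is hypothetical.

```python
def out_size(size, kernel_size, padding=0, stride=1):
    """Output length along one dimension (dilation assumed to be 1)."""
    return (size + 2 * padding - kernel_size) // stride + 1


side = 28                           # MNIST: 1 x 28 x 28
side = out_size(side, 5)            # conv1 (k=5): 10 x 24 x 24
side = out_size(side, 2, stride=2)  # pooling:     10 x 12 x 12
side = out_size(side, 5)            # conv2 (k=5): 20 x 8 x 8
side = out_size(side, 2, stride=2)  # pooling:     20 x 4 x 4
flat_features = 20 * side * side    # input size of self.fc
print(flat_features)  # 320
```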

How to Use GPU

1. Move Model to GPU

- Define device as the first visible cuda device if we have CUDA available.

- Convert the parameters and buffers of all modules to CUDA tensors.

```python
model = Net()
device = torch.device('cuda:0' if torch.cuda.is_available() else 'cpu')
model.to(device)
```

2. Move Tensors to GPU

The tensors used in the computation must also be moved to the GPU, onto the same device as the model.

def train(epoch):

running_loss = 0.0

for batch_idx, data in enumerate(train_loader, 0):

inputs, target = data

inputs, target = inputs.to(device), target.to(device) # 新增

optimizer.zero_grad()

# forward + backward + updata

outputs = model(inputs)

loss = criterion(outputs, target)

loss.backward()

optimizer.step()

running_loss += loss.item()

if batch_idx % 300 == 299:

print('[%d, %5d] loss: %.3f' % (epoch + 1, batch_idx + 1, running_loss / 300))

running_loss = 0.0

def test():

correct = 0

total = 0

with torch.no_grad():

for data in test_loader:

images, labels = data

images, labels = images.to(device), labels.to(device) # 新增

outputs = model(images)

_, predicted = torch.max(outputs.data, dim=1)

total += labels.size(0)

correct += (predicted == labels).sum().item()

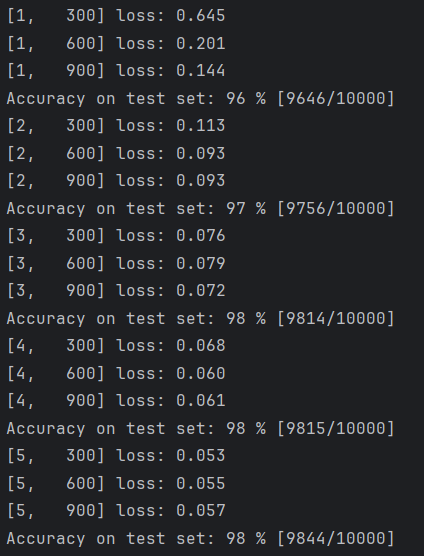

print('Accuracy on test set: %d %% [%d/%d]' % (100 * correct / total, correct, total))Results